Table of Contents

- What is Hot-Add & Hot-Plug?

- What is Non-uniform memory access (NUMA)?

- Some VMs configuration examples on a Windows Server

- NUMA is enabled, but with only one NUMA node

- How to use VM Hotplug on your vCPU and vRAM?

- Limitations

- Conclusion

When Virtualization was first introduced in the late 1990s and 2000s, mainly by VMware, no one imagined what we can do with Virtualization today.

Virtualization has evolved a lot. Back then, using Hot-Add & Hot-Plug on a Virtual Machine was something no one thought would be possible, especially having a Guest OS recognize new hardware while online.

Maybe it was to be expected, since this was already possible on physical Servers, where the OS already recognized Hot-Plug devices like disks. It was only a matter of time before virtualized environments could do the same.

What is Hot-Add & Hot-Plug?

It is the ability to add extra devices to your Virtual Machine without powering it off. It is called "hot" because the VM stays powered on while you do it.

You can Hot-Add vRAM and Hot-Plug vCPUs to your Virtual Machines while they are running to adapt to their workloads, and the Guest OS instantly recognizes the newly added vCPU/vRAM and makes it usable by your system.

Hot-Add & Hot-Plug is mainly used for, and known for, vRAM and vCPU, but we can also add other virtual removable devices using the same feature.

The types of devices you can add using Hot-Add and Hot-Plug:

- Memory

- CPU

- USB devices

- Virtual Disks

- Virtual Network

We can use Hot-Add & Hot-Plug to add all of the above devices, but the most important are vRAM and vCPU, and this article focuses on those two.

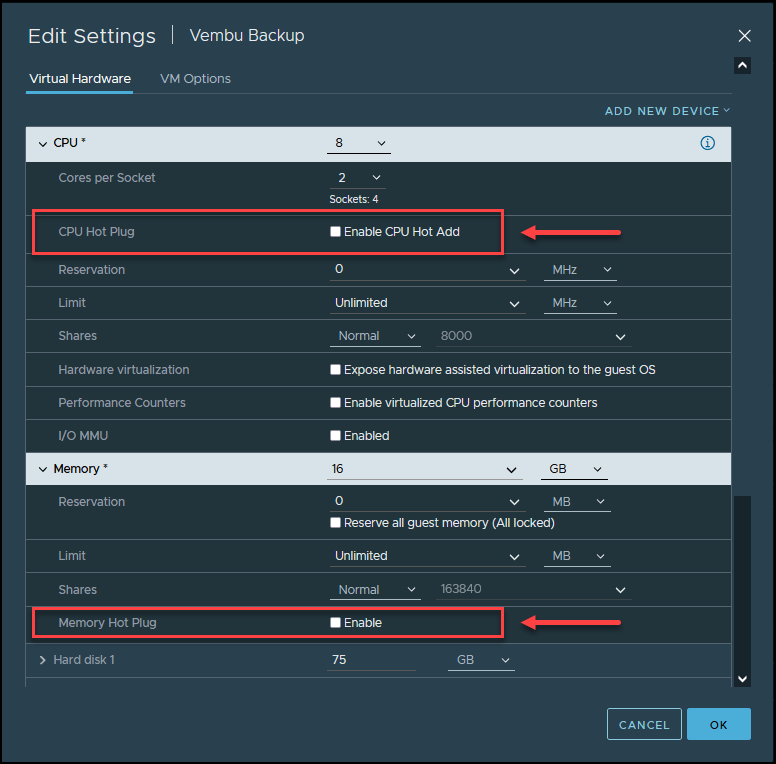

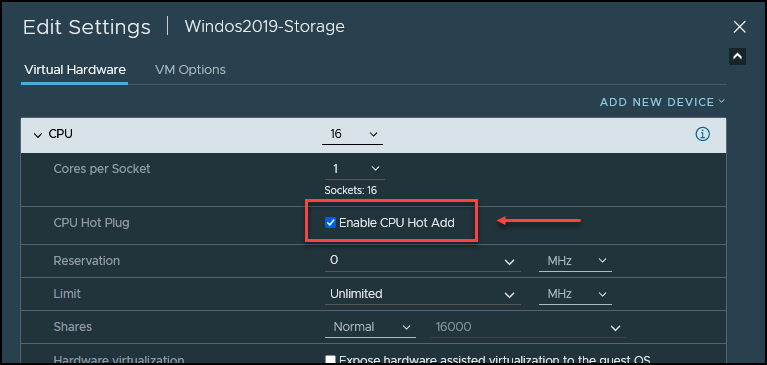

Hot-Add & Hot-Plug are not enabled by default for vRAM and vCPU. You need to enable them on your Virtual Machine while the VM is powered off.

Note: When you try to enable the above options with the VM powered on, they are grayed out.
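To check from the ESXi shell whether a VM already has these options set, you can read its .vmx file. The sketch below assumes the standard .vmx keys vcpu.hotadd and mem.hotadd, and treats a missing key as disabled since both options are off by default. vmx_value and hotadd_status are hypothetical helper names, not VMware tools.

```shell
#!/bin/sh
# Sketch: report whether vCPU Hot-Plug and vRAM Hot-Add are enabled in a .vmx file.
# Assumes the standard keys vcpu.hotadd and mem.hotadd written as: key = "VALUE".
# A missing key is reported as FALSE because both options are disabled by default.

vmx_value() {
  # $1 = .vmx file, $2 = key; prints the unquoted value, or nothing if the key is absent
  awk -v key="$2" '
    {
      line = $0
      sub(/^[ \t]+/, "", line)
      split(line, kv, "=")
      k = kv[1]; gsub(/[ \t]+$/, "", k)
      if (tolower(k) == tolower(key)) {
        v = substr(line, index(line, "=") + 1)
        gsub(/^[ \t]*"|"[ \t]*$/, "", v)   # strip surrounding spaces and quotes
        print v
        exit
      }
    }' "$1"
}

hotadd_status() {
  cpu=$(vmx_value "$1" vcpu.hotadd)
  mem=$(vmx_value "$1" mem.hotadd)
  # Anything other than TRUE (any letter case) counts as disabled
  case "$cpu" in [Tt][Rr][Uu][Ee]) cpu=TRUE ;; *) cpu=FALSE ;; esac
  case "$mem" in [Tt][Rr][Uu][Ee]) mem=TRUE ;; *) mem=FALSE ;; esac
  printf 'vcpu.hotadd=%s mem.hotadd=%s\n' "$cpu" "$mem"
}
```

Example (the datastore path is only illustrative): hotadd_status /vmfs/volumes/datastore1/db01/db01.vmx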

Always consider the amount of memory in your ESXi host and its number of physical sockets when configuring your VMs.
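A minimal sketch of how to read those numbers on the host itself. It assumes the field labels CPU Packages and CPU Cores in the output of esxcli hardware cpu global get, and Physical Memory and NUMA Node Count in the output of esxcli hardware memory get (verify the labels on your ESXi build). It counts physical cores only, not Hyperthreading threads; cores_per_socket and mem_per_numa_node_gb are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: derive physical cores per socket and memory per NUMA node from esxcli output.
# Assumed labels: "CPU Packages:" / "CPU Cores:" and "Physical Memory:" / "NUMA Node Count:".

cores_per_socket() {
  # stdin: output of `esxcli hardware cpu global get`
  # CPU Threads (Hyperthreading) is deliberately ignored.
  awk -F': *' '
    /CPU Packages:/ { pkgs = $2 + 0 }
    /CPU Cores:/    { cores = $2 + 0 }
    END { if (pkgs > 0) print int(cores / pkgs) }'
}

mem_per_numa_node_gb() {
  # stdin: output of `esxcli hardware memory get`; Physical Memory is reported in bytes
  awk -F': *' '
    /Physical Memory:/ { bytes = $2 + 0 }
    /NUMA Node Count:/ { nodes = $2 + 0 }
    END { if (nodes > 0) print int(bytes / nodes / 1073741824) }'
}
```

Usage: esxcli hardware cpu global get | cores_per_socket, and esxcli hardware memory get | mem_per_numa_node_gb.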

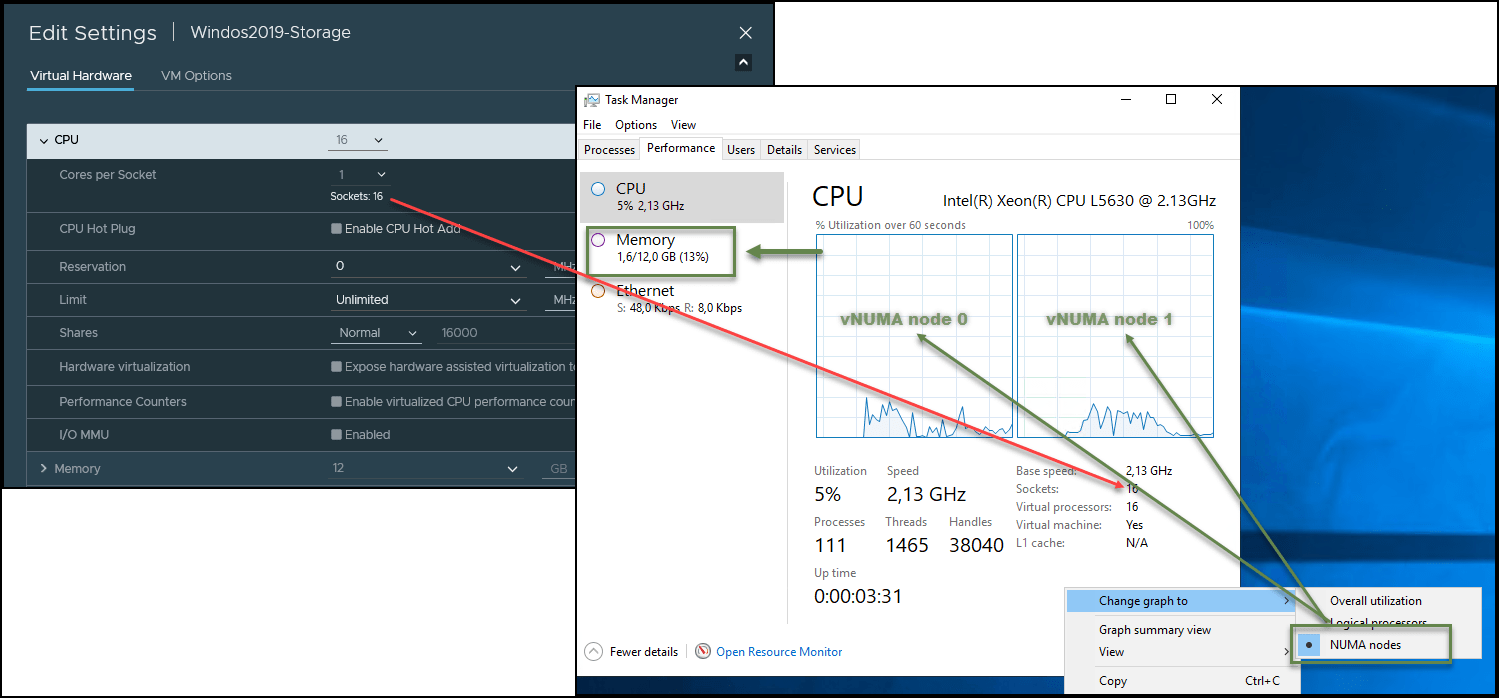

VMware also has a knowledge base article, KB83980, about this on Windows VMs: enabling vCPU Hot-Add creates fake NUMA nodes on Windows.

When using Hot-Add & Hot-Plug, CPU and Memory are the most important devices. Because of that, I write a bit about both, and about how Hot-Add & Hot-Plug interact with Non-uniform memory access (NUMA).

What is Non-uniform memory access (NUMA)?

NUMA scheduling in ESXi needs a total of at least four CPU cores, and at least two CPU cores per NUMA node, to work correctly.
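The rule above can be written as a simple check. This sketch only encodes the two thresholds from the text (total cores and cores per NUMA node); numa_scheduler_eligible is a hypothetical helper, and because it uses integer division, an uneven split is judged by its smallest node.

```shell
#!/bin/sh
# Sketch: ESXi NUMA scheduler rule from the text:
# at least 4 CPU cores in total AND at least 2 CPU cores per NUMA node.
# $1 = total physical cores, $2 = number of NUMA nodes

numa_scheduler_eligible() {
  total=$1
  nodes=$2
  if [ "$nodes" -le 0 ]; then
    echo no; return 1
  fi
  per_node=$((total / nodes))   # integer division: cores on the smallest node
  if [ "$total" -ge 4 ] && [ "$per_node" -ge 2 ]; then
    echo yes
  else
    echo no
    return 1
  fi
}
```

For example, numa_scheduler_eligible 24 2 prints yes, while numa_scheduler_eligible 4 4 prints no because each node would have only one core.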

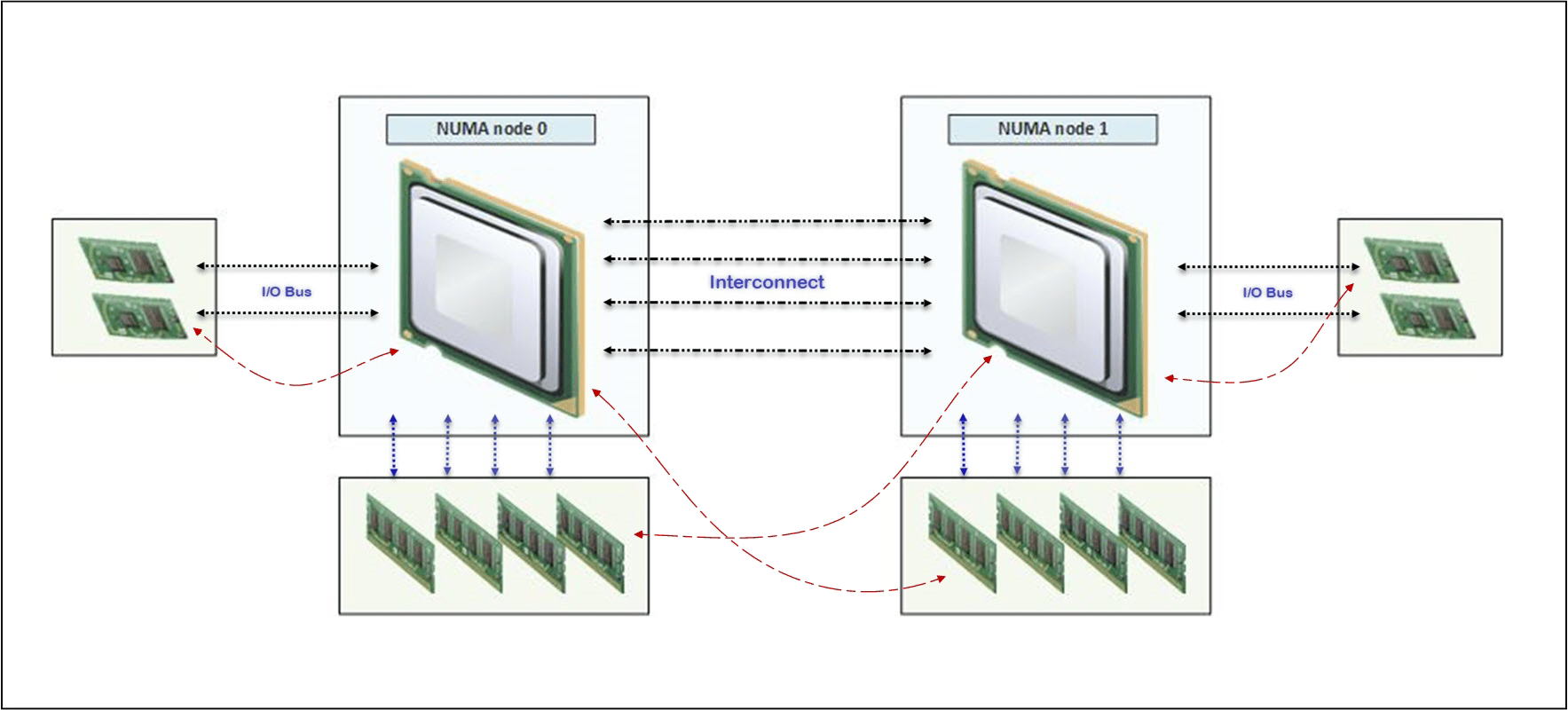

NUMA is a memory design for multiprocessing in which memory access time depends on the location of the memory relative to the processor.

Each processor (NUMA node) has its own local memory, and the nodes are connected by a shared inter-processor bus.

With NUMA, a processor accesses its own local memory faster than non-local (remote) memory, which has to be transferred across the inter-processor bus.

For example: Between NUMA node 0 and NUMA node 1.

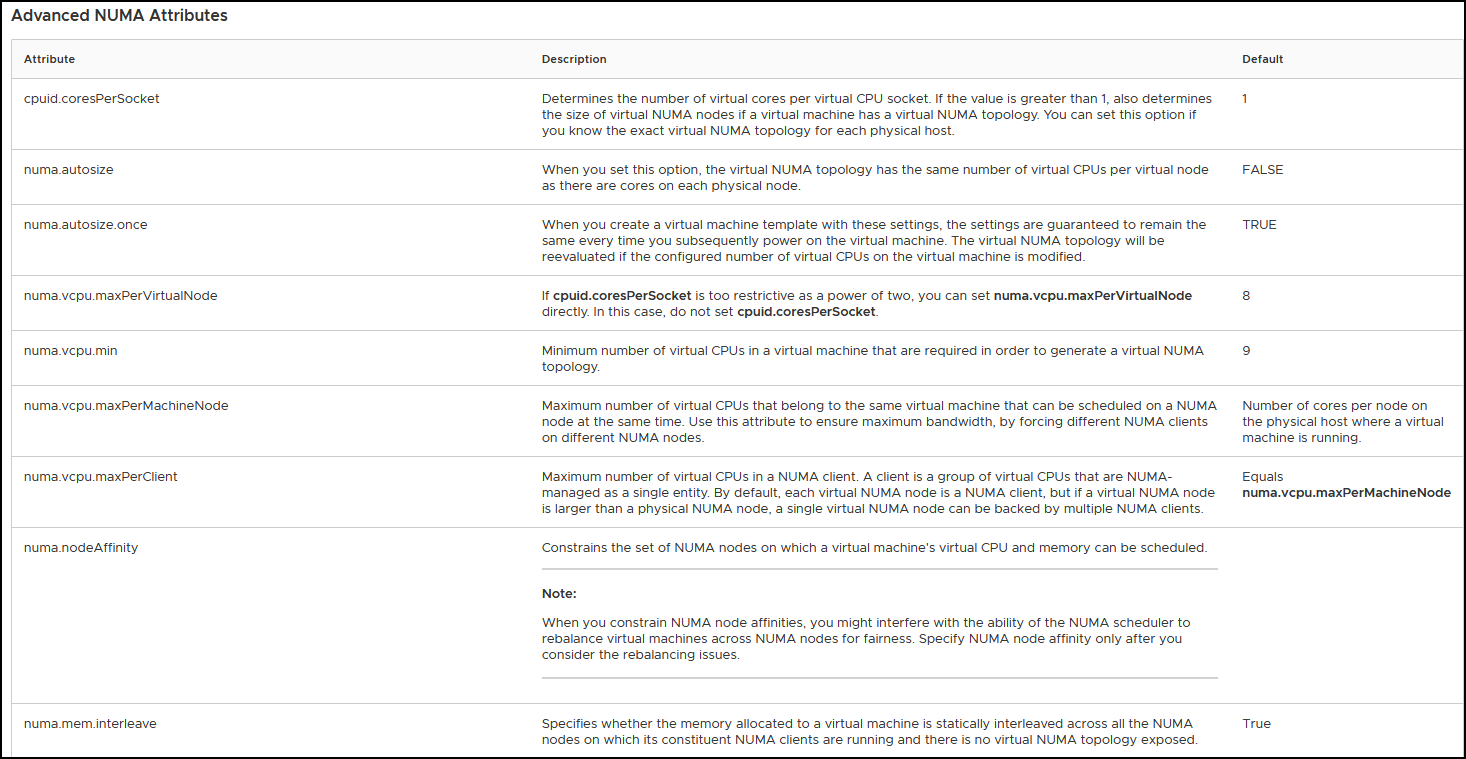

For more details and the NUMA attributes in ESXi 7.0, check HERE; the same information is available for all ESXi versions.

We can customize our NUMA settings, including their maximum values. Check the above URL to understand how to use them.

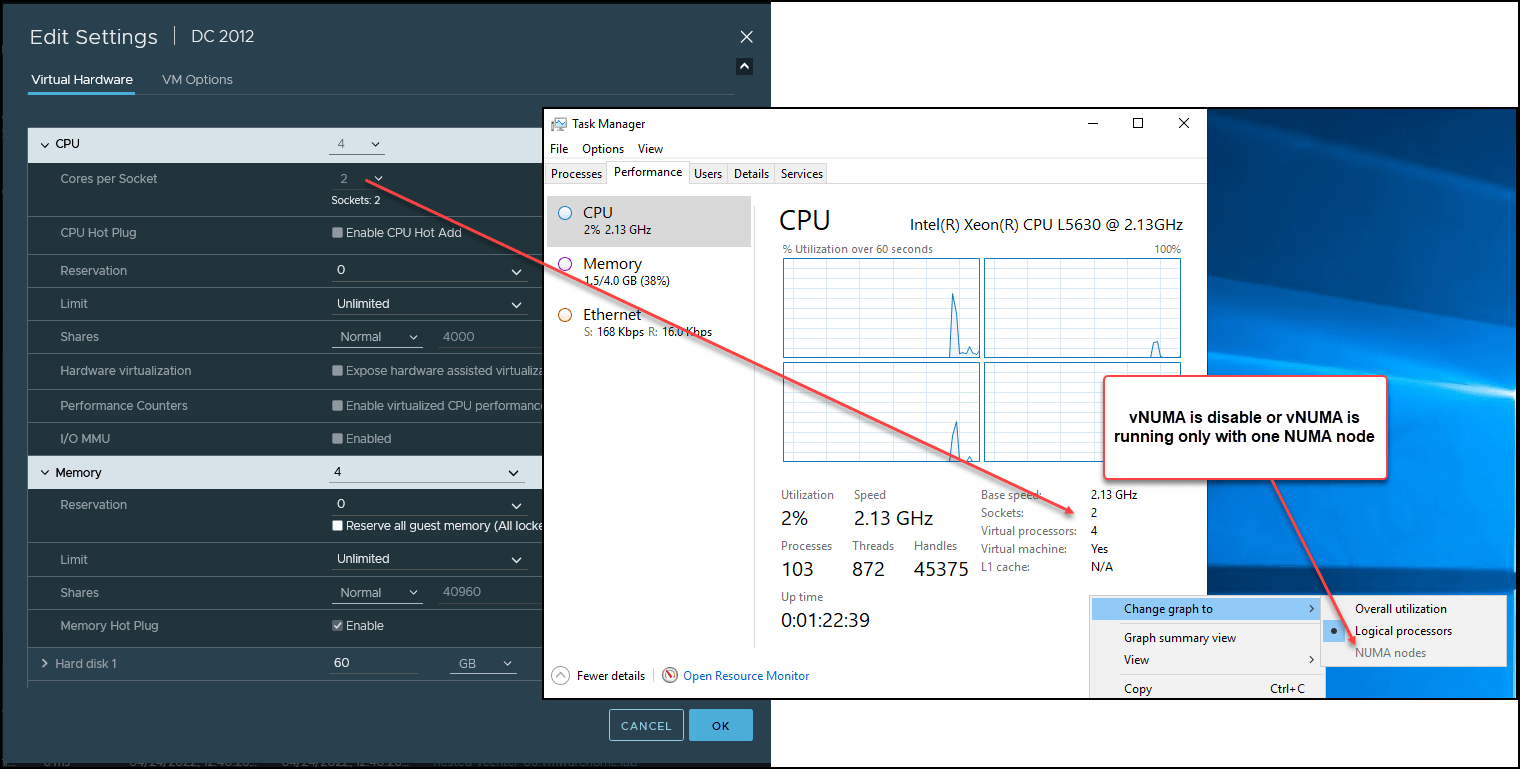

As stated at the beginning, if you enable vCPU Hot-Plug on a VM, NUMA and vNUMA are disabled for your Guest OS, and it uses UMA instead.

The number of vCPUs you select, combined with the Cores per Socket setting, determines the number of vNUMA nodes presented to your Guest OS.

For example, a VM with 24 vCPUs and 12 Cores per Socket with 24GB of vRAM gets 2 vNUMA nodes with 12GB per vNUMA node (the amount of memory your physical server has for each CPU).

The memory per vNUMA node is always multiplied by the number of NUMA nodes. If you have 12GB of memory per vNUMA node and two NUMA nodes, your ESXi host needs at least 24GB of physical memory to match your configuration and deliver good performance.
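The sizing arithmetic can be sketched as follows. It follows the rule used in this article, that vCPUs divided by Cores per Socket gives the number of vNUMA nodes, and splits the VM memory evenly across them; vnuma_layout is a hypothetical helper. Each physical NUMA node on the host should have at least mem_per_node_gb of memory available.

```shell
#!/bin/sh
# Sketch of the vNUMA arithmetic: nodes = vCPUs / Cores per Socket,
# memory per node = VM memory / nodes.
# $1 = vCPUs, $2 = Cores per Socket, $3 = VM memory in GB

vnuma_layout() {
  vcpus=$1; cps=$2; mem_gb=$3
  # vCPUs must be a positive multiple of Cores per Socket
  if [ "$vcpus" -le 0 ] || [ "$cps" -le 0 ] || [ $((vcpus % cps)) -ne 0 ]; then
    echo "vCPUs ($vcpus) must be a positive multiple of Cores per Socket ($cps)" >&2
    return 1
  fi
  nodes=$((vcpus / cps))
  printf 'nodes=%s vcpus_per_node=%s mem_per_node_gb=%s\n' \
    "$nodes" "$cps" "$((mem_gb / nodes))"
}
```

vnuma_layout 24 12 24 prints nodes=2 vcpus_per_node=12 mem_per_node_gb=12, so with two physical NUMA nodes the host needs at least 2 x 12GB = 24GB.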

When you enable Hot-Plug for vCPU on your VM, virtual NUMA (vNUMA) is disabled. vNUMA is very important for your Guest OS performance, especially if you run large VMs like Database Servers and Application Servers.

By enabling vCPU Hot-Plug, your VM/Guest OS loses visibility of your physical NUMA topology.

If you plan to enable Hot-Plug, you should set your vCPU and Cores per Socket to 1×1, which gives you a single vNUMA node. Otherwise, you need to follow the exact physical topology (CPUs vs Cores) of your ESXi host.

Do not rely on Hyper-Threading logical processors to configure NUMA in your VMs. Always stick to the number of physical cores (or fewer) when configuring your VM's vCPUs.

To be on the safe side, you can set the number of vCPUs in your VM and leave Cores per Socket at its default. Unless you need a particular configuration, this works correctly.

This matters because the number of vCPUs allocated to each vNUMA node should be balanced; if it is not, you could have performance issues.
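Both sizing checks from this section (stay within the physical cores, keep vCPUs evenly split across vNUMA nodes) fit in a small sketch. check_vcpu_layout is a hypothetical helper; it assumes you pass the host's physical core count (for example, CPU Cores from esxcli hardware cpu global get), not the number of logical processors.

```shell
#!/bin/sh
# Sketch of two sizing checks:
#  1) vCPUs must not exceed the host's physical cores (Hyperthreading threads not counted)
#  2) vCPUs must split evenly across the vNUMA nodes
# $1 = vCPUs, $2 = vNUMA nodes, $3 = physical cores on the host

check_vcpu_layout() {
  vcpus=$1; nodes=$2; phys_cores=$3
  if [ "$vcpus" -gt "$phys_cores" ]; then
    echo "too-many-vcpus"; return 1
  fi
  # The nodes check runs first, so the modulo never divides by zero
  if [ "$nodes" -le 0 ] || [ $((vcpus % nodes)) -ne 0 ]; then
    echo "unbalanced"; return 1
  fi
  echo "ok"
}
```

For example, check_vcpu_layout 15 2 24 prints unbalanced, because 15 vCPUs cannot be split evenly across 2 vNUMA nodes.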

If you are interested in NUMA vs vNUMA, Frank Denneman from VMware has excellent blog posts about this subject. Check here for the latest one, for vSphere 7.0.

Some VMs configuration examples on a Windows Server

First example: NUMA not enabled, or only one NUMA node in use.

Run the following command in the ESXi shell to check whether NUMA is enabled for each VM:

```shell
vmdumper -l | cut -d \/ -f 2-5 | while read path; do egrep -oi "DICT.*(displayname.*|numa.*|cores.*|vcpu.*|memsize.*|affinity.*)= .*|numa:.*|numaHost:.*" "/$path/vmware.log"; echo -e; done
```

NUMA is enabled, but with only one NUMA node

```text
numaHost: NUMA config: consolidation= 1 preferHT= 0 partitionByMemory = 0
numaHost: 1 VCPUs 1 VPDs 1 PPDs
numaHost: VCPU 0 VPD 0 PPD 0
```

NUMA is enabled with two NUMA nodes:

To check whether your VM is using NUMA and whether its vCPUs are balanced across the NUMA nodes of your ESXi host, run the ESXi command: sched-stats -t numa-clients

This command shows every VM, how many NUMA nodes it uses, and how many vCPUs run on each node.

```text
[root@ESXi01:~] sched-stats -t numa-clients
```

| groupName | groupID | clientID | homeNode | affinity | nWorlds | vmmWorlds | localMem | remoteMem | currLocal | cummLocal |
|---|---|---|---|---|---|---|---|---|---|---|
| vm.1068813 | 133495 | 0 | 0 | 3 | 16 | 16 | 12626032 | 0 | 100 | 100 |
| vm.1068813 | 133495 | 1 | 1 | 3 | 16 | 16 | 5191752 | 0 | 100 | 10 |

In the above example, VM vm.1068813 runs on 2 NUMA nodes (homeNode) and has 16 vCPUs on each NUMA node (nWorlds), so its vCPUs are balanced across the NUMA nodes.

vCPUs become unbalanced across NUMA nodes when vCPU and Cores per Socket are not set correctly.
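To check the balance for many VMs at once, the numa-clients output can be summarized per VM. This sketch assumes the raw, whitespace-separated output of sched-stats -t numa-clients with the column order shown in the table above (groupName, groupID, clientID, homeNode, affinity, nWorlds, ...). Following the reading above, it treats a VM as balanced when all of its NUMA clients report the same nWorlds. numa_balance is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: summarize `sched-stats -t numa-clients` output per VM (groupName).
# Assumed columns: $1 groupName, $4 homeNode, $6 nWorlds.
# A VM is "balanced" when every one of its NUMA clients has the same nWorlds.

numa_balance() {
  awk '
    $1 == "groupName" { next }   # skip the header row
    NF < 6 { next }              # skip blank or partial lines
    {
      vm = $1
      clients[vm]++
      if (vm in nodes) nodes[vm] = nodes[vm] "," $4
      else { nodes[vm] = $4; first[vm] = $6; order[++n] = vm }
      if ($6 != first[vm]) bad[vm] = 1
    }
    END {
      for (i = 1; i <= n; i++) {
        vm = order[i]
        printf "%s clients=%d homeNodes=%s %s\n", vm, clients[vm], nodes[vm], ((vm in bad) ? "unbalanced" : "balanced")
      }
    }'
}
```

Usage: sched-stats -t numa-clients | numa_balance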

In this last case, we have a VM with the vCPU Hot-plug enabled:

```text
[root@ESXi01:~] sched-stats -t numa-clients
groupName groupID clientID homeNode affinity nWorlds vmmWorlds localMem remoteMem currLocal cummLocal

numaHost: NUMA config: consolidation= 1 preferHT= 1 partitionByMemory = 0
numa: Hot add is enabled and vNUMA hot add is disabled, forcing UMA.
numaHost: 16 VCPUs 1 VPDs 2 PPDs
```

As we can see in the above example, vNUMA is disabled and the VM is using UMA: it has 1 virtual proximity domain (VPD) and 2 physical proximity domains (PPD), when we should have 2 VPDs and 2 PPDs.

The VM's virtual NUMA layout does not match the physical NUMA nodes. This makes memory access take longer and degrades VM performance.
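The same comparison can be automated from the vmware.log lines, for example the output of the vmdumper/egrep command earlier in this article. This sketch assumes the "numaHost: <n> VCPUs <n> VPDs <n> PPDs" line format shown above and flags a VM whenever VPDs and PPDs differ, which is the article's criterion; it also reports the "forcing UMA" message. vpd_ppd_check is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: compare virtual (VPD) and physical (PPD) proximity domains from vmware.log lines.
# Matches "numaHost: <n> VCPUs <n> VPDs <n> PPDs" anywhere in the line (log prefixes are fine);
# per-vCPU lines such as "numaHost: VCPU 0 VPD 0 PPD 0" are ignored.

vpd_ppd_check() {
  awk '
    /numaHost:.*VCPUs.*VPDs.*PPDs/ {
      for (i = 2; i <= NF; i++) {
        if ($i == "VCPUs") vcpus = $(i - 1)
        if ($i == "VPDs")  vpds  = $(i - 1)
        if ($i == "PPDs")  ppds  = $(i - 1)
      }
      status = (vpds == ppds) ? "match" : "mismatch"
      printf "vcpus=%s vpds=%s ppds=%s %s\n", vcpus, vpds, ppds, status
    }
    /forcing UMA/ { print "vNUMA disabled (UMA forced)" }'
}
```

Usage (the path is only illustrative): vpd_ppd_check < /vmfs/volumes/datastore1/db01/vmware.log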

How to use VM Hotplug on your vCPU and vRAM?

Requirements:

- Hot-Add enabled on the Virtual Machine

- Virtual Machines compatible with ESXi 5.0 and later, with at least hardware version 7

- Hot-Add & Hot-Plug is not compatible with Fault Tolerance

- vSphere Advanced, Enterprise, or Enterprise Plus
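The hardware-version requirement can be checked from the VM's .vmx file. This sketch assumes the standard virtualHW.version key; Fault Tolerance, licensing and Guest OS support are not checked. hw_version_ok is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: check that a VM's .vmx declares hardware version 7 or later.
# Assumes a line of the form: virtualHW.version = "N"

hw_version_ok() {
  # Split on double quotes: $1 is the key part, $2 is the value
  ver=$(awk -F'"' 'tolower($1) ~ /^[ \t]*virtualhw\.version[ \t]*=[ \t]*$/ { print $2; exit }' "$1")
  if [ -n "$ver" ] && [ "$ver" -ge 7 ] 2>/dev/null; then
    echo "ok (hardware version $ver)"
  else
    echo "unsupported (hardware version ${ver:-unknown})"
    return 1
  fi
}
```

Usage (the path is only illustrative): hw_version_ok /vmfs/volumes/datastore1/db01/db01.vmx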

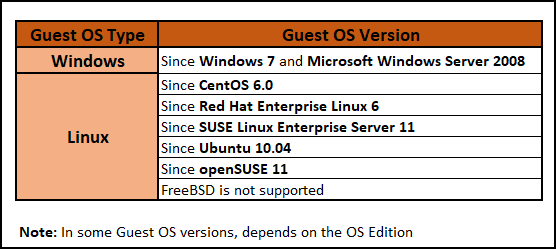

Hot-Add needs to be enabled in VMware, but most importantly, it needs to be supported by the Guest OS.

Next is a small list of the Guest OS versions where Hot-Add & Hot-Plug is supported.

Check VMware KB2051989 for Hot Add Memory and Hot Add vCPU on different Windows editions.

Advantages:

The main advantage is to change devices in your VM without downtime. You can add vRAM and vCPU to your VM without the need to power off your VM.

Disadvantages:

There are significant disadvantages to enabling vCPU Hot-Plug because of CPU vNUMA.

Problems with vNUMA are well documented. When you enable Hot-Add for vCPU, vNUMA is automatically disabled. This means that the Guest OS does not know how its vCPUs and memory are laid out across the physical NUMA nodes.

After you add vRAM or vCPUs, you cannot remove them while the VM is running. So use this feature carefully so that you don't overload your Hypervisor.

CPU affinity also disables NUMA.

Limitations

- The VM Hot-Add memory maximum is 16 times the original memory size. Example: 4GB x 16 = 64GB

- Linux VMs need a minimum of 4GB of memory to Hot-Add up to 16 times that amount. With less (3GB), it is only possible to Hot-Add up to a maximum of 32GB

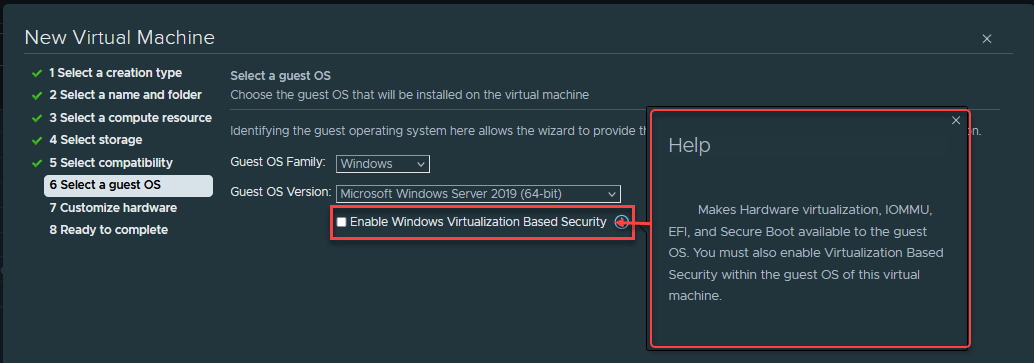

- Hot-Add vRAM and vCPU do not work for Windows virtual machines when Virtualization Based Security (VBS) is enabled in the OS. To add vRAM or vCPU, you need to power off the VM

- In some cases, your VMs have performance and vCPU number limitations when enabling vCPU Hot-Add

For example, when creating a VM in VMware.
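The memory limits from the list above can be expressed as a small calculation. This is a sketch of the rules as stated in this article (16 times the initial memory, and a 32GB cap for Linux VMs with less than 4GB), using whole GB values; max_hot_add_gb is a hypothetical helper.

```shell
#!/bin/sh
# Sketch of the memory Hot-Add limits as stated in this article (values in whole GB):
#  - maximum = 16 x the memory the VM was configured with
#  - Linux guests with less than 4GB are capped at 32GB
# $1 = initial memory in GB, $2 = guest type ("linux" or anything else)

max_hot_add_gb() {
  initial=$1
  guest=$2
  max=$((initial * 16))
  if [ "$guest" = "linux" ] && [ "$initial" -lt 4 ] && [ "$max" -gt 32 ]; then
    max=32
  fi
  echo "$max"
}
```

For example, max_hot_add_gb 4 windows prints 64, and max_hot_add_gb 3 linux prints 32 instead of 48.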

Conclusion

As we can see above, we can use Hot-Add & Hot-Plug for several devices, mainly vCPU and vRAM.

Enabling Hot-Plug for vRAM is not very problematic, but with vCPU we run into many issues with NUMA/vNUMA.

That is why it is crucial to understand how NUMA works and then decide if it is necessary to enable the vCPU Hot-Plug.

In my opinion, Hot-Add & Hot-Plug for vCPU should only be enabled if a VM runs processes that may need additional resources when workloads increase, and it is not possible to power off the VM to perform this task. If it is a Database Server or Application Server with huge workloads, I do not recommend enabling it.

If this is not the case, you should leave Hot-Add & Hot-Plug disabled (the default), and if you need to increase the vCPUs on your VM, power it off and then change the number of vCPUs.
