Quick Bites:

- The blog post provides a comprehensive comparison between Microsoft’s NTFS and ReFS file systems

- It discusses the key features, performance, reliability, and scalability of each file system. NTFS, a long-established file system, excels in performance and supports features like compression and encryption

- ReFS, introduced with Windows Server 2012, focuses on data integrity and resilience, featuring automatic error correction and improved performance for virtualized workloads

- The article also highlights the adoption of ReFS in Windows Server 2016 and 2019, noting its suitability for specific use cases like Storage Spaces Direct (S2D) but cautioning against its use with Cluster Shared Volumes (CSV) due to performance issues

The latest versions of Microsoft Windows Server add many feature improvements and new technologies. Storage is one of the areas that has improved the most in recent releases, with the introduction of a new software-defined storage technology as well as a new file system.

Table of Contents

- What is Resilient File System (ReFS)?

- What is NTFS?

- NTFS vs ReFS – Comparing the Features

- Performance

- Reliability

- Scalability

- NTFS vs ReFS – Overall Comparison

- Windows Server 2016 Hyper-V NTFS vs ReFS

- Wrapping up NTFS vs ReFS Comparison

The NTFS file system has been the staple of Windows Server operating systems since Windows NT 3.1, which was introduced back in 1993. As it has done with many aspects of Windows Server, Microsoft saw the need to improve upon NTFS and introduced a brand-new file system called ReFS, or Resilient File System.

In this post, we’ll look at the following:

- What is ReFS?

- What is NTFS?

- A general overview of comparing NTFS with ReFS along with features and functionality

- Differences between NTFS and ReFS for Windows Server 2016 Hyper-V

What is Resilient File System (ReFS)?

Microsoft’s new Resilient File System (ReFS), codenamed “Protogon”, was introduced in Windows Server 2012 and is the newest file system used by the Windows operating system. As the name suggests, resiliency is one of its core features.

In fact, with ReFS there is no chkdsk-style utility that you run to repair file system errors; instead, errors are detected and corrected by processes that run by default. ReFS records checksums when it writes data and verifies them when it reads the data back, to make sure the data has not been corrupted. Checksums are always kept for metadata and, optionally, for file data through a feature called integrity streams.

Aside from the integrity checks performed during disk I/O, a scanner runs periodically to detect corruption proactively. Microsoft calls this the scrubber, or data integrity scanner. When corruption is detected on a volume that has redundancy, such as a mirrored or parity Storage Spaces volume, ReFS automatically repairs it from a healthy copy. All of this happens while the volume is online, and no reboots are required for the integrity checks or repairs.
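The detect-and-repair cycle described above can be sketched in Python. This is a minimal in-memory model, not ReFS's on-disk format: the `MirroredVolume` class, its two-copy mirror, and the use of CRC32 from `zlib` as the checksum are all illustrative assumptions. Checksums are stored apart from the data and verified on every read, and `scrub()` walks every block the way the scrubber does, repairing a bad copy only when a healthy one exists.

```python
import zlib
from dataclasses import dataclass, field


def checksum(data: bytes) -> int:
    # Stand-in checksum (assumption): CRC32 from zlib, not ReFS's actual algorithm.
    return zlib.crc32(data)


@dataclass
class MirroredVolume:
    """Hypothetical two-way mirror with checksums stored separately from the data."""
    copies: list = field(default_factory=lambda: [{}, {}])  # block id -> bytes, per mirror copy
    checksums: dict = field(default_factory=dict)           # block id -> expected checksum

    def write(self, block_id: int, data: bytes) -> None:
        # Write every mirror copy and record the checksum alongside (not inside) the data.
        for copy in self.copies:
            copy[block_id] = data
        self.checksums[block_id] = checksum(data)

    def read(self, block_id: int) -> bytes:
        """Verify the checksum on read; return a good copy and repair any bad ones."""
        expected = self.checksums[block_id]
        for copy in self.copies:
            data = copy[block_id]
            if checksum(data) == expected:
                self._repair(block_id, data)
                return data
        # No redundancy left to repair from: report corruption instead of returning bad data.
        raise OSError(f"block {block_id}: no copy matches its checksum")

    def _repair(self, block_id: int, good: bytes) -> None:
        for copy in self.copies:
            if copy[block_id] != good:
                copy[block_id] = good

    def scrub(self) -> list:
        """Periodic scan of every block, like the scrubber; returns the repaired block ids."""
        repaired = []
        for block_id, expected in self.checksums.items():
            bad = [c for c in self.copies if checksum(c[block_id]) != expected]
            if bad and len(bad) < len(self.copies):
                self.read(block_id)  # read() repairs bad copies from a healthy one
                repaired.append(block_id)
        return repaired


vol = MirroredVolume()
vol.write(0, b"hello")
vol.write(1, b"world")
vol.copies[0][1] = b"w0rld"  # simulate silent corruption on one mirror copy
print(vol.scrub())           # [1]
print(vol.copies[0][1])      # b'world'
```

If every copy of a block fails its checksum, `read()` raises an error rather than returning bad data, which reflects the point that automatic repair depends on having a redundant copy.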

Another ReFS feature is copy-on-write. When you perform a simple operation such as renaming a file, ReFS writes a new copy of the file’s metadata. Only after the new metadata has been completely written does ReFS switch the file’s pointer to the new metadata, including the new name.

The copy on write functionality helps to prevent common data corruption scenarios involving power loss or other unclean shutdowns of a system when these types of file operations have not fully completed. By using the copy on write workflow, ReFS greatly minimizes the chance of corruption due to these types of issues.
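The copy-on-write sequence can be illustrated with a small in-memory sketch. The `CowMetadataStore` and `PowerLoss` names are hypothetical, and a Python attribute stands in for the on-disk pointer; real ReFS updates on-disk structures, not objects. The point shown is the ordering: the new record is completed first, and the pointer moves only afterwards, so an interruption between the two steps leaves the old, consistent metadata in place.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class FileMetadata:
    """Immutable metadata record; any change produces a new record."""
    name: str
    size: int


class PowerLoss(Exception):
    """Simulated crash in the middle of a metadata update (hypothetical)."""


class CowMetadataStore:
    """Hypothetical copy-on-write model: `current` plays the role of the on-disk pointer."""

    def __init__(self, meta: FileMetadata) -> None:
        self.current = meta

    def rename(self, new_name: str, crash_before_commit: bool = False) -> None:
        # Step 1: build a complete new copy; the live record is never modified in place.
        new_meta = replace(self.current, name=new_name)
        if crash_before_commit:
            # The pointer was never moved, so the old record is still the one in effect.
            raise PowerLoss("power lost before the pointer update")
        # Step 2: only after the new copy is complete is the pointer switched.
        self.current = new_meta


store = CowMetadataStore(FileMetadata(name="report.docx", size=4096))
try:
    store.rename("report-final.docx", crash_before_commit=True)
except PowerLoss:
    pass
print(store.current.name)  # report.docx (old metadata survives the simulated crash)
store.rename("report-final.docx")
print(store.current.name)  # report-final.docx
```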

What is NTFS?

NTFS is the longstanding file system that has been around since the days of Windows NT 3.1. With NTFS, Microsoft made it possible to apply granular security permissions to files and folders through Access Control Lists (ACLs).

NTFS also made other powerful enterprise features possible, such as BitLocker encryption, compression, deduplication, disk quotas, storage of Hyper-V virtual disks, and storage technologies like Cluster Shared Volumes (CSV).

Even though ReFS may be Microsoft’s newest and, in many ways, superior file system, NTFS is still very much the de facto standard file system in Windows Server. NTFS remains the default file system for boot volumes and is used to boot all current versions of Microsoft Windows.

NTFS vs ReFS – Comparing the Features

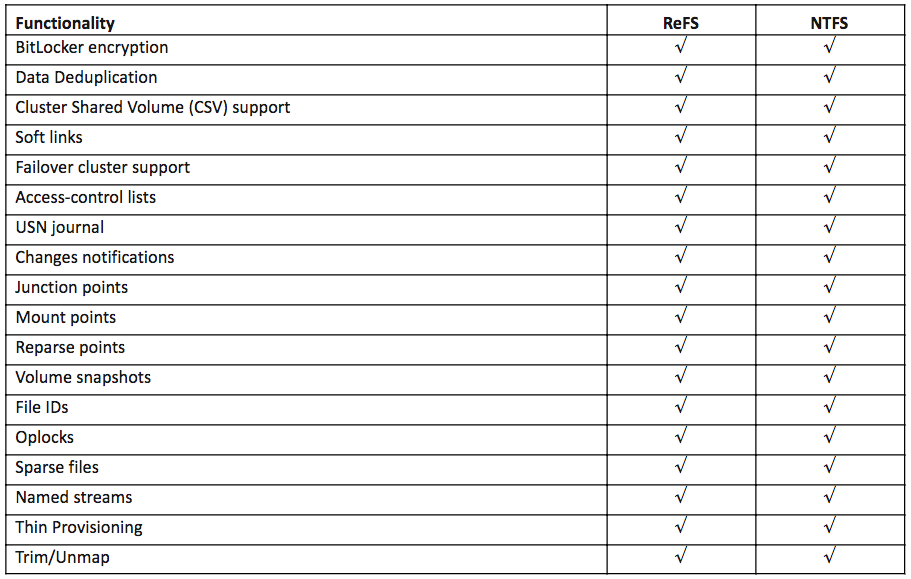

When comparing NTFS and ReFS for today’s modern workloads, both are extremely powerful file systems that provide enterprise-level features for physical and virtual workloads alike. Microsoft used NTFS as a starting point for developing ReFS and added features that NTFS does not have. However, some NTFS features are not available in ReFS.

One of the challenges with ReFS has been its early implementation issues. ReFS has gone through several growing pains across Windows Server versions, which has led to very slow adoption of the file system for production workloads.

Early on, one of the limitations of ReFS was the lack of deduplication and compression, and this was still true in Windows Server 2016. Starting with Windows Server 2019, however, ReFS supports deduplication and compression. As of Windows Server 2019, ReFS has truly come of age and offers the features and functionality that many enterprise environments require.

To begin with, let’s compare the following key areas of both file systems and see how they stack up:

- Performance

- Reliability

- Scalability

Performance

When it comes to general file-level performance, NTFS is still the king of all-out performance for production workloads. With ReFS, the built-in integrity checks add overhead to file operations, which can reduce I/O performance.

Even for modern Microsoft applications such as Exchange Server, ReFS is not recommended across the board. Microsoft gives the following guidance on using ReFS with Exchange Server:

- “Best practice: Data integrity features must be disabled for the Exchange database (.edb) files or the volume that hosts these files. Integrity features can be enabled for volumes containing the content index catalog, provided that the volume does not contain any databases or log files.”

NTFS includes several built-in performance-enhancing technologies by default:

- Configurable cluster sizes – Cluster sizes can be adjusted to suit the type of workload

- Defragmentation of both standard files and the Master File Table – Moving files to where they can be accessed efficiently improves performance

- Compression – Compressing files reduces their size, which can help with both performance and capacity

- Configurable naming conventions – Adjusting naming behavior, for example disabling 8.3 short-name creation, can increase performance in NTFS
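Why cluster size should match the workload comes down to simple arithmetic: every file occupies a whole number of clusters, so the unused tail of the last cluster ("slack") grows with cluster size. The sketch below uses hypothetical file sets and ignores NTFS details such as very small files stored directly in the Master File Table and compression.

```python
KB = 1024


def allocated_bytes(file_size: int, cluster_size: int) -> int:
    """On-disk space for a file: a whole number of clusters, rounded up."""
    return -(-file_size // cluster_size) * cluster_size


def slack_bytes(file_sizes: list[int], cluster_size: int) -> int:
    """Space allocated but not used by file data (the partly filled last cluster)."""
    return sum(allocated_bytes(size, cluster_size) - size for size in file_sizes)


# Hypothetical workloads: many small files vs. a few large virtual disks.
small_files = [10 * KB] * 1000
large_vhdx = [40 * KB**3] * 5  # five 40 GiB files

for cluster in (4 * KB, 64 * KB):
    print(f"{cluster // KB} KB clusters: "
          f"small files waste {slack_bytes(small_files, cluster) // KB} KB, "
          f"VHDX files waste {slack_bytes(large_vhdx, cluster) // KB} KB")
```

With these inputs, 4 KB clusters waste 2,000 KB on the small files while 64 KB clusters waste 54,000 KB, and the large virtual disks waste nothing with either size. That is why small clusters favor general file servers, while larger clusters cost little for workloads dominated by large files.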

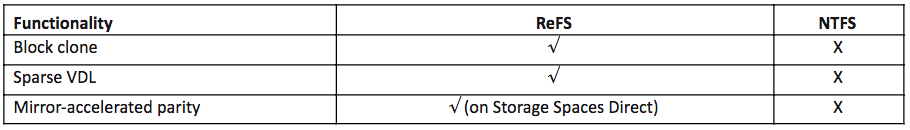

While ReFS may fall short when competing head-to-head with NTFS on general workloads, virtualization performance is where ReFS shines. Microsoft has engineered ReFS with several features that speed up virtualized workloads and virtualization operations, including the following:

- Block cloning – With block cloning, copy operations are performed as low-cost metadata operations rather than by actually reading and writing file data. This dramatically speeds up checkpoint merge operations. Block cloning also means that cloned data is not stored multiple times.

- Sparse VDL – Sparse VDL (valid data length) allows ReFS to zero files extremely quickly. Operations such as creating fixed-size VHD(X) files take only seconds instead of potentially minutes.
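Both ideas can be sketched with two small hypothetical classes. `CloneVolume` models clusters with reference counts: a clone copies only the list of cluster ids, and a later write to a shared cluster allocates a private copy. `SparseVdlFile` models a valid data length, so a large fixed-size file can be "created" without writing any zeros, because reads past the VDL simply return zeros. Neither class reflects ReFS's real on-disk structures; the names and the single high-water-mark VDL are simplifying assumptions.

```python
class CloneVolume:
    """Hypothetical model of reference-counted clusters, used to illustrate block cloning."""

    def __init__(self) -> None:
        self.clusters: dict[int, bytes] = {}  # cluster id -> data
        self.refcount: dict[int, int] = {}    # cluster id -> number of files sharing it
        self.next_id = 0
        self.bytes_written = 0                # file data actually written

    def allocate(self, data: bytes) -> int:
        cid = self.next_id
        self.next_id += 1
        self.clusters[cid] = data
        self.refcount[cid] = 1
        self.bytes_written += len(data)
        return cid

    def block_clone(self, extents: list[int]) -> list[int]:
        # Metadata-only operation: copy the cluster list and bump reference counts.
        for cid in extents:
            self.refcount[cid] += 1
        return list(extents)

    def write_cluster(self, extents: list[int], index: int, data: bytes) -> None:
        cid = extents[index]
        if self.refcount[cid] > 1:
            # Shared cluster: give this file a private copy instead of changing shared data.
            self.refcount[cid] -= 1
            extents[index] = self.allocate(data)
        else:
            self.clusters[cid] = data
            self.bytes_written += len(data)


class SparseVdlFile:
    """Hypothetical model of valid data length (VDL): bytes past the VDL read as zeros."""

    def __init__(self, size: int) -> None:
        self.size = size        # space is reserved up front, but nothing is zero-filled
        self.vdl = 0            # bytes [0, vdl) hold written data
        self.buf = bytearray()

    def append(self, payload: bytes) -> None:
        if self.vdl + len(payload) > self.size:
            raise ValueError("write past end of file")
        self.buf += payload
        self.vdl += len(payload)

    def read(self, offset: int, length: int) -> bytes:
        end = min(offset + length, self.size)
        valid = bytes(self.buf[offset:min(end, self.vdl)])
        return valid + b"\x00" * max(0, end - offset - len(valid))


vol = CloneVolume()
parent = [vol.allocate(b"A" * 4096), vol.allocate(b"B" * 4096)]
child = vol.block_clone(parent)     # no file data written: bytes_written stays 8192
vol.write_cluster(child, 1, b"C" * 4096)
print(vol.bytes_written)            # 12288: only the modified cluster was written

disk = SparseVdlFile(size=1 << 30)  # a "fixed-size" 1 GiB file created without zeroing
disk.append(b"boot")
print(disk.read(0, 8))              # b'boot\x00\x00\x00\x00'
```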

Reliability

When considering the differences between NTFS and ReFS, reliability is a key area of comparison.

By its very nature, ReFS is built for resilience and reliability. As we have already touched on, there are many built-in mechanisms in ReFS that are designed to ensure data corruption is prevented.

These reliability mechanisms include:

- Copy on write – File metadata changes are first written to a copy of the file metadata and then the pointer is changed. This helps to prevent corruption due to power loss or some other unexpected system failure during the operation.

- Scrubber operation – A scheduled process that checks for corruption and corrects any corruption it finds when a healthy redundant copy is available

- In-line file integrity checks – As data is read from and written to disk, ReFS examines the checksum of the data for data corruption

What about NTFS? Most IT admins have used the chkdsk utility to find and repair data corruption on NTFS-formatted volumes. However, you might not be aware that Windows Server 2008 introduced self-healing NTFS, which automatically attempts to correct data corruption. This is a much more “ReFS-like” approach.

Improvements to the NTFS kernel code allow self-healing NTFS to correct disk inconsistencies without negative system impact. Self-healing NTFS brings many benefits, including:

- Provides continuous availability – In most cases, chkdsk no longer needs to run with exclusive access to the disk

- Data is preserved – Corrupted data can be salvaged more easily

- Better reporting – The system captures and reports self-healing operations

- Recovers boot volumes that are readable – Self-healing NTFS can determine whether a boot volume can be recovered

- Preserves critical system files – System files that are critical to system functions, booting, etc. can be healed

While ReFS is purpose-built for resiliency and reliability, NTFS has come a long way in modern Windows Server releases, with better and more effective ways to preserve data affected by corruption, automatically and less intrusively.

Scalability

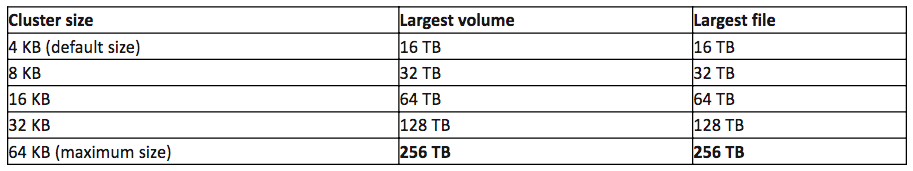

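When it comes to pure scalability, NTFS cannot match ReFS. NTFS supports at most 2^32 − 1 clusters per volume, so its maximum volume size depends on the cluster size: 256 TB with 64 KB clusters, or up to 8 PB with 2 MB clusters on Windows Server 2019 and later.

With ReFS, by contrast, the maximum file and volume size is 35 petabytes. ReFS is therefore the obvious choice for storing extremely large amounts of data.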
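The NTFS volume limits follow directly from multiplying the maximum cluster count by the cluster size. The sketch below assumes the 2^32 − 1 cluster limit from Microsoft's NTFS documentation and reports binary units (TiB); it is arithmetic only, not a query against a real volume.

```python
MAX_NTFS_CLUSTERS = 2**32 - 1  # clusters per NTFS volume (assumed from Microsoft's NTFS docs)
TiB = 2**40


def ntfs_max_volume_bytes(cluster_bytes: int) -> int:
    """Largest NTFS volume for a given cluster size: cluster count x cluster size."""
    return MAX_NTFS_CLUSTERS * cluster_bytes


# Cluster sizes above 64 KB require Windows Server 2019 / Windows 10 version 1709 or later.
for cluster_kb in (4, 64, 2048):
    size_tib = ntfs_max_volume_bytes(cluster_kb * 1024) / TiB
    print(f"{cluster_kb:>4} KB clusters -> about {size_tib:,.0f} TiB")
```

The results are roughly 16 TiB, 256 TiB, and 8,192 TiB (8 PiB) respectively, all well below the 35 PB ReFS ceiling.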

NTFS vs ReFS – Overall Comparison

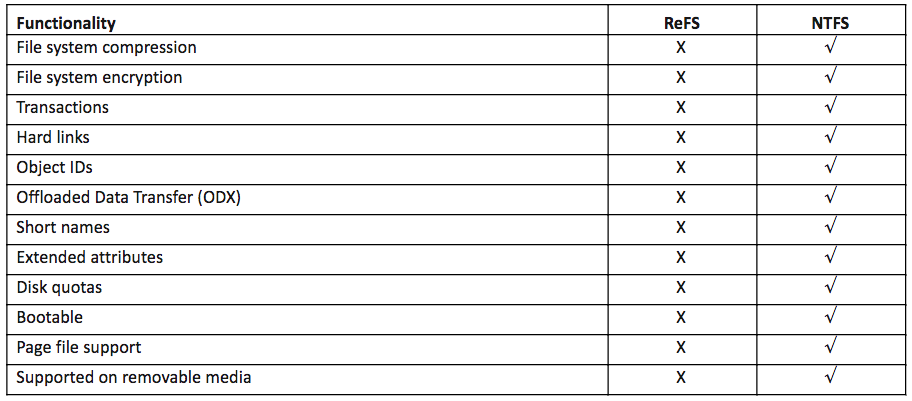

Below is a detailed comparison of features found in both NTFS and ReFS, features found only in ReFS, and features found only in NTFS.

Features in NTFS and ReFS:

Features in ReFS and not NTFS:

Features in NTFS but not ReFS:

Windows Server 2016 Hyper-V NTFS vs ReFS

Now that we have a general overview of comparing NTFS with ReFS along with features and functionality, let’s narrow down to a comparison of the two file systems in relation to Windows Server 2016 and Hyper-V. When you compare the two in this context, there are several key points to note.

With ReFS on Windows Server 2016, deduplication and compression are not available; these features were introduced in Windows Server 2019. As a result, Hyper-V workloads on Windows Server 2016, such as VDI, cannot benefit from the tremendous space savings of deduplication on ReFS.

There are a few other important points to note when comparing NTFS and ReFS for Hyper-V on Windows Server 2016. You will most likely use one of two storage configurations: a traditional configuration with shared storage connected to a Hyper-V cluster, or a Storage Spaces Direct (S2D) configuration that uses software-defined storage.
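Why deduplication saves so much space for VDI can be shown with a toy chunk store. This sketch is not Windows Data Deduplication's actual algorithm: it splits each file into fixed-size 4 KiB chunks (the real feature uses variable-size chunking), stores each unique chunk once keyed by its SHA-256 hash, and keeps a per-file list of chunk hashes for rebuilding the file. The ten "desktops" are hypothetical test data that share one base image.

```python
import hashlib

CHUNK_SIZE = 4096  # hypothetical fixed chunk size; the real feature uses variable-size chunks


def dedup(files: dict[str, bytes]) -> tuple[dict[str, bytes], dict[str, list[str]]]:
    """Split files into chunks and keep one copy of each unique chunk."""
    store: dict[str, bytes] = {}        # chunk hash -> chunk data, stored once
    recipes: dict[str, list[str]] = {}  # file name -> ordered chunk hashes
    for name, data in files.items():
        hashes = []
        for offset in range(0, len(data), CHUNK_SIZE):
            chunk = data[offset:offset + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            store.setdefault(digest, chunk)
            hashes.append(digest)
        recipes[name] = hashes
    return store, recipes


def rehydrate(store: dict[str, bytes], recipes: dict[str, list[str]], name: str) -> bytes:
    """Rebuild a file from its chunk recipe."""
    return b"".join(store[h] for h in recipes[name])


# Hypothetical VDI pool: ten desktops cloned from one 16 KiB base image, plus unique data each.
base_image = b"".join(hashlib.sha256(str(i).encode()).digest() for i in range(512))
desktops = {
    f"vdi-{n}.vhdx": base_image + f"user-data-{n}".encode().ljust(CHUNK_SIZE, b"\0")
    for n in range(10)
}
store, recipes = dedup(desktops)
logical = sum(len(d) for d in desktops.values())
physical = sum(len(c) for c in store.values())
print(logical, physical)  # 204800 57344
```

Because the shared base image is stored once, the pool occupies about 28% of its logical size here; real savings depend entirely on how much data the virtual disks actually share.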

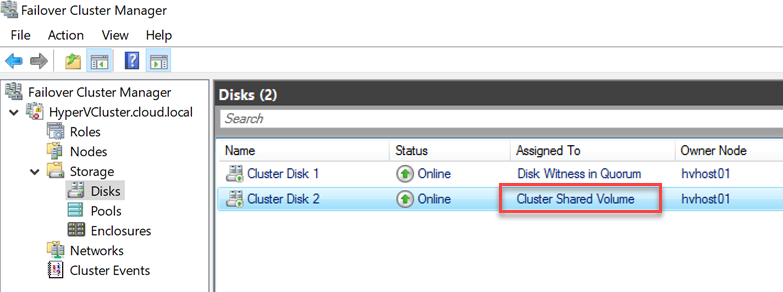

With traditional shared storage, you configure Cluster Shared Volumes (CSV), which allow multiple Hyper-V hosts to access the same files on the storage at the same time. In a CSV configuration, NTFS is the preferred file system. Why?

When a CSV is formatted with ReFS, it always runs in file system redirected mode. This means that I/O from every other node is sent over the cluster network to the coordinator node chosen by the Hyper-V cluster, which can cause serious performance issues.
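The effect on a four-node cluster can be reasoned about with a simplified model. The function names, node names, and the assumption that VMs are spread evenly across nodes are hypothetical; the model ignores metadata operations (which always go through the coordinator) and situations in which an NTFS CSV is temporarily redirected. Under those assumptions, every node except the coordinator sends its data I/O across the network when the CSV is formatted with ReFS.

```python
def csv_data_io_path(file_system: str, node: str, coordinator: str) -> str:
    """Simplified path taken by data I/O issued on `node` against a CSV on shared storage."""
    if file_system == "NTFS" or node == coordinator:
        # NTFS CSVs allow direct data I/O from every node; the coordinator owns the disk anyway.
        return "direct"
    # A ReFS CSV always runs in file system redirected mode.
    return f"redirected over the cluster network to {coordinator}"


def redirected_share(file_system: str, nodes: list[str], coordinator: str) -> float:
    """Fraction of nodes whose data I/O crosses the cluster network (even load assumed)."""
    redirected = [n for n in nodes if csv_data_io_path(file_system, n, coordinator) != "direct"]
    return len(redirected) / len(nodes)


nodes = ["hv1", "hv2", "hv3", "hv4"]
print(redirected_share("NTFS", nodes, coordinator="hv1"))  # 0.0
print(redirected_share("ReFS", nodes, coordinator="hv1"))  # 0.75
```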

In contrast, with Storage Spaces Direct (S2D), ReFS is the preferred file system. With S2D and ReFS, the performance impact is minimal, because RDMA network adapters offload much of the overhead of S2D network traffic.

What is RDMA?

RDMA stands for remote direct memory access. It is essentially zero-copy networking: the network adapter transfers data directly to or from application memory, eliminating the need to copy data between application memory and operating system data buffers. This bypasses bottlenecks that may be imposed by the CPU, caches, or other hardware in between. Storage Spaces Direct has no shared storage as such, so access is essentially redirected across the nodes anyway, and RDMA network adapters carry that traffic efficiently.
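The difference between copying data and referencing it in place can be illustrated, by analogy only, inside a single Python process. This is not RDMA and involves no network adapter; it simply contrasts a slice that duplicates a buffer with a `memoryview` that shares the original memory, which is the kind of extra copy RDMA avoids between the adapter and application memory.

```python
# In-process analogy for "zero-copy"; this is NOT RDMA, which works at the network-adapter level.
buf = bytearray(b"payload-from-the-network")

# Copying: slicing a bytearray allocates a new buffer and copies the bytes into it.
copied = buf[0:7]

# Zero-copy: a memoryview slice refers to the same underlying memory; nothing is duplicated.
view = memoryview(buf)[0:7]

# Overwrite part of the original buffer in place (same length, so no resize is needed).
buf[0:7] = b"PAYLOAD"

print(bytes(copied))  # b'payload'  -> the copy is stale
print(bytes(view))    # b'PAYLOAD'  -> the view sees the original memory
```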

Because of the performance limitations of ReFS on CSV, NTFS is the recommended file system for Hyper-V with Cluster Shared Volumes (CSV) on traditional shared storage. With S2D, however, ReFS lets you take advantage of all of its virtualization performance benefits, including Sparse VDL and block cloning, without the performance penalty seen on traditional shared storage.

Wrapping up NTFS vs ReFS Comparison

Both NTFS and ReFS are powerful file systems in the realm of Windows Server, and each has its pros and cons. NTFS has been around considerably longer and has a longer list of features and supported configurations. Although ReFS is newer, it has certain limitations to be aware of, especially in Windows Server 2016.

If you plan to use ReFS in production, Windows Server 2019 is much preferable to Windows Server 2016, because important features like deduplication and compression were introduced in Windows Server 2019.

Also, be aware of the limitations of ReFS with Cluster Shared Volumes (CSV) in Windows Server Hyper-V. For Hyper-V clusters, ReFS is recommended only with Storage Spaces Direct (S2D), because a ReFS-formatted CSV on traditional shared storage always runs in file system redirected mode.

BDRSuite Protects Your Data Efficiently and Effectively

No matter what your storage configuration, BDRSuite efficiently and effectively protects your storage environment with robust enterprise features that allow you to align with backup best practices.

Incremental Backups with Change Tracking

Using Hyper-V Resilient Change Tracking, Vembu keeps your backups efficient by copying only the data that has changed since the last backup. This means your backups require less storage and take less time to complete.

Application-Aware Backups

Vembu protects not only your data but also your applications. Using application-aware backups, Vembu makes sure the data that powers your business-critical applications stays consistent.

Security and Compliance Focused

Vembu uses industry-standard security by encrypting your backups both in flight and at rest. In addition, the VembuHIVE file system provides an ultra-secure and efficient way to access your backups for recovery. Advanced retention configurations make it easy to meet your compliance and regulatory needs.


You said “It is best practice with Hyper-V to format the volume with 64 KB block size for optimal performance”. Do you mean that when you create a virtual disk/CSV volume in a Storage Spaces Direct/hyper-converged cluster scenario, you still recommend 64K as opposed to 4K, with ReFS of course?

There is good official guidance from Microsoft here: https://blogs.technet.microsoft.com/filecab/2017/01/13/cluster-size-recommendations-for-refs-and-ntfs/. MSFT steers towards 4K as a general best practice; however, 64K clusters are appropriate for large, sequential IO workloads. MSFT also says, “there are many scenarios where 64K cluster sizes make sense, such as: Hyper-V…”. In other words, 4K clusters give you space savings, but in my performance testing, 64K has an added benefit. Additionally, you should always test in your specific environment with your specific workloads to determine the best option, as different use cases may benefit from different approaches.

Hmmm. I liked the looks of ReFS, and after some basic testing I found I/O performance to be slightly faster than NTFS on the same disk subsystem. But it doesn’t seem to play well with RAID controllers, or more specifically write caching, at least in my experience. I’ve seen Hyper-V servers with NTFS partitions unexpectedly reboot or shut down and be fine afterwards, but, although it’s a rare event, every single one with a ReFS partition suffered some sort of irreparable corruption, including loss of the entire ReFS partition. A BBU for the controller cache doesn’t seem to help; the only solutions I’ve found so far are to disable write caching entirely or stick to NTFS. Am I missing something?

CueBall,

I think ReFS is still new enough, especially with Windows Server 2016, that sticking with NTFS might be a good bet until ReFS comes of age. I have heard similar reports of ReFS issues from other users besides yourself. Microsoft is making efforts to improve it, as we see in Windows Server 2019 with additional features and functionality being added. With each new release of Windows Server, we will most likely see most of the issues with ReFS get resolved.