Quick Bites:

- Performance: Close, maybe slight edge to iSCSI, especially for high IOPS

- iSCSI: More complex setup, better load balancing & RDM support, LUN snapshots

- NFS: Easier to configure, simpler recovery from power failures, size adjustments possible

- Security: NFS v4.1 has encryption and multipathing. iSCSI needs external measures

- Choice: Depends on needs and environment. All-flash? Skip NFS. Need clustering? Consider iSCSI

The choice between iSCSI and NFS Storage for hypervisor environments has been debated for as long as both have been available for virtual environments.

This article focuses on choosing between iSCSI and NFS in a VMware environment, not on other hypervisors or other systems.

What is the best choice? Which performs best? Which is the easiest to manage?

It depends on your environment and the type of data you are using. Cost, performance, availability, and ease of management are the factors to consider when selecting the Storage protocol for your VMware environment.

A Virtual Machine can run on both with almost the same performance. But if you need extra volumes, for example a Shared Folder to hold your ISOs and backup files, or a shared folder for Cloud Director, NFS needs to be your choice.

Table of Contents

- Some details about iSCSI and NFS

- In VMware, what are the differences?

- iSCSI

- iSCSI Limitations

- NFS

- Basic quick performance tests on iSCSI and NFS datastores

- Conclusion

iSCSI arrived on the market later (2003), and most Storage vendors that had built their Storage Systems on Network-attached Storage (NAS), such as NetApp, adapted those systems to support iSCSI as well.

But as the market and the technology changed, new Storage Systems, like the All-Flash Systems, were designed and built to work as Block Storage Systems.

Some details about iSCSI and NFS

iSCSI and NFS can run over 1Gb, 10Gb, or faster TCP/IP networks, and both can use Jumbo Frames (MTU 9000).

Both protocols can add overhead on the host CPU (encapsulating SCSI commands or file I/O into TCP/IP packets), since both use Network interfaces.
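To make the Jumbo Frames point concrete, the following is a rough sketch of how much of each Ethernet frame carries storage payload at MTU 1500 versus MTU 9000. It assumes IPv4 and TCP headers without options (20 bytes each) and standard Ethernet framing overhead, and it ignores the iSCSI and NFS protocol headers themselves, so the figures are illustrative only. The function names are hypothetical.

```python
import math

# Assumed per-frame overheads in bytes (no VLAN tag, no IP/TCP options).
IPV4_HEADER = 20
TCP_HEADER = 20
# Ethernet header (14) + FCS (4) + preamble/SFD (8) + inter-frame gap (12)
ETHERNET_OVERHEAD = 14 + 4 + 8 + 12


def tcp_payload_per_frame(mtu: int) -> int:
    """Bytes of TCP payload that fit in one frame for a given MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER


def wire_efficiency(mtu: int) -> float:
    """Fraction of on-wire bytes that is TCP payload."""
    return tcp_payload_per_frame(mtu) / (mtu + ETHERNET_OVERHEAD)


def frames_needed(data_bytes: int, mtu: int) -> int:
    """Number of frames required to carry data_bytes of TCP payload."""
    return math.ceil(data_bytes / tcp_payload_per_frame(mtu))


for mtu in (1500, 9000):
    print(
        f"MTU {mtu}: efficiency {wire_efficiency(mtu):.2%}, "
        f"frames for 1 MiB: {frames_needed(1024 * 1024, mtu)}"
    )
```

Fewer frames for the same amount of data also means fewer packets for the host CPU and the network stack to process, which is the main reason Jumbo Frames are usually recommended for both protocols.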

Regarding Load Balancing, iSCSI and NFS work differently.

NFS v3.0 doesn’t support multipathing. To use multipathing in your NFS environment, you need to use NFS v4.1. When using NFS multipathing, you can use LACP or NIC Teaming in your vCenter vDS switches.

There are still some limits to the NFS multipathing and load-balancing approaches that can be taken. Although there are two connections when an NFS datastore is mounted to an ESXi host (one connection for control, the other connection for data), there is still only a single TCP session for I/O.

In iSCSI, multipathing in ESXi hosts is done at the VMkernel level by using port binding for each VMkernel adapter created for your iSCSI connections. The Round-robin (RR) path selection policy (PSP) distributes the load across multiple paths to the iSCSI target.
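The round-robin idea can be sketched in a few lines. This is only an illustration of the rotation logic, not VMware's implementation: the class name, the path labels, and the `ios_per_path` parameter (how many I/Os are sent on one path before switching to the next) are all assumptions made for the example.

```python
from collections import Counter
from itertools import cycle


class RoundRobinSelector:
    """Hypothetical path selector: rotate paths after a fixed number of I/Os."""

    def __init__(self, paths, ios_per_path=1):
        if not paths:
            raise ValueError("at least one path is required")
        self._cycle = cycle(paths)
        self._ios_per_path = ios_per_path
        self._current = next(self._cycle)
        self._sent_on_current = 0

    def next_path(self):
        """Return the path to use for the next I/O."""
        if self._sent_on_current >= self._ios_per_path:
            # Quota reached on this path: move on to the next one.
            self._current = next(self._cycle)
            self._sent_on_current = 0
        self._sent_on_current += 1
        return self._current


# Two hypothetical paths, one per bound VMkernel adapter.
paths = ["vmk1->target-portal-A", "vmk2->target-portal-B"]
selector = RoundRobinSelector(paths, ios_per_path=2)

sequence = [selector.next_path() for _ in range(8)]
usage = Counter(sequence)
print(sequence)
print(dict(usage))
```

With two paths and a switch after every two I/Os, eight I/Os end up split evenly, four per path.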

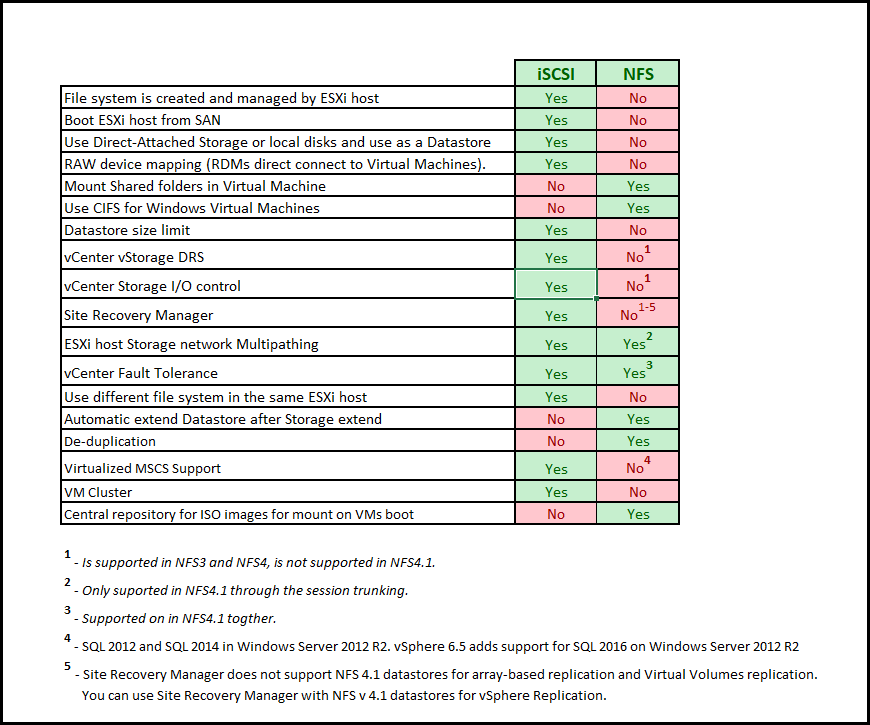

In VMware, what are the differences?

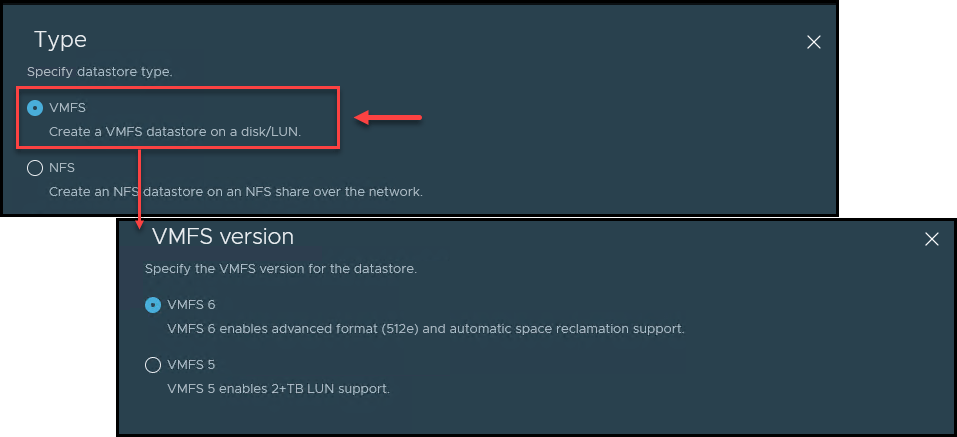

Most of the above differences in VMware are not due to iSCSI itself but to the VMware file system, VMFS. An NFS share cannot be formatted as a VMFS Datastore; only iSCSI, FCoE, or FC volumes can, because those are block-based storage protocols. Storage presented as blocks in this way gives us a storage area network (SAN) Datastore.

The main difference between these protocols is that iSCSI is block-based, and NFS is a file-based storage protocol.

iSCSI

iSCSI presents block devices to your ESXi hosts, which are then formatted as a VMFS Datastore. An iSCSI SAN uses a client-server architecture.

In iSCSI architecture, rather than accessing blocks from a local disk, I/O operations are carried out over a network using a block access protocol.

The server is known as an iSCSI target. Typically, the iSCSI target represents a physical storage system on the network. In the case of iSCSI, remote blocks are accessed by encapsulating SCSI commands and data into TCP/IP packets.

iSCSI Initiators and iSCSI Targets are central to the client-server architecture. The iSCSI client is called the iSCSI initiator (hardware or software), and the iSCSI server is called the iSCSI Target.

iSCSI works with the Pluggable Storage Architecture (PSA). Better load distribution with PSP_RR is achieved when multiple LUNs are accessed concurrently.

For security, iSCSI can use a username and password for the Target through the Challenge Handshake Authentication Protocol (CHAP). With CHAP, iSCSI makes sure that Initiator and Target trust each other.

Originally, an ESXi host could only support 8 (8!) paths for iSCSI, but since ESXi 7.0 U2, this limit is 32 paths.
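As a rough illustration of how a CHAP exchange proves knowledge of the password without sending it over the network, here is a minimal sketch of the MD5 variant described in RFC 1994, where the response is the MD5 hash of the identifier byte, the shared secret, and the challenge. The secret value and function names are hypothetical, and this is a simplified one-way exchange, not the code path ESXi or any storage array actually uses.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """CHAP-MD5 response: MD5(identifier byte || secret || challenge)."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def verify_response(identifier: int, secret: bytes,
                    challenge: bytes, response: bytes) -> bool:
    """Authenticator side: recompute the response and compare in constant time."""
    expected = chap_response(identifier, secret, challenge)
    return hmac.compare_digest(expected, response)


# Hypothetical shared secret configured on both initiator and target.
shared_secret = b"example-chap-secret"

# The target sends a random challenge and an identifier to the initiator.
identifier = 1
challenge = os.urandom(16)

# The initiator answers with a hash; the secret itself never crosses the wire.
response = chap_response(identifier, shared_secret, challenge)
accepted = verify_response(identifier, shared_secret, challenge, response)

# An initiator that does not know the secret produces a response that fails.
bad_response = chap_response(identifier, b"wrong-secret", challenge)
rejected = not verify_response(identifier, shared_secret, challenge, bad_response)

print(accepted, rejected)
```

In mutual (bidirectional) CHAP, the same exchange is repeated in the other direction so that the initiator also authenticates the target.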

iSCSI Benefits

To connect your iSCSI Storage to an ESXi host, you need an iSCSI initiator. It can be a software initiator (most commonly used) or a hardware initiator (needs an HBA).

Note: To boot from SAN using iSCSI with the software iSCSI adapter, you need a network adapter that supports the iSCSI Boot Firmware Table (iBFT) format.

You can use iSCSI to create Raw Device Mapping (RDM) virtual disks (direct SAN virtual disks) on your Virtual Machines.

The RDM contains metadata for managing and redirecting disk access to the physical device. With the RDM, a virtual machine can access and use the storage device directly.

This type of disk is needed and crucial in some cases, like Multi-server clustering, SAN Aware Applications, or even physical-to-Virtual clustering cases.

LUN snapshots for your VM Backups. When using an iSCSI LUN, your backup tool can take LUN Snapshots.

iSCSI Limitations

iSCSI has fewer limitations on VMware than NFS, mainly because iSCSI uses the VMware VMFS file system.

One of the worst issues I have seen with iSCSI is when ESXi hosts lose the connection to their iSCSI Storage and we get an All Paths Down (APD) condition. Even though this is just a timeout, the ESXi host sometimes freezes, nothing can be done, and the host needs a reboot. This can also happen in an NFS environment, but the consequences are less severe: once the NAS array is back, the Virtual Machines are back and accessible.

More complex and time-consuming to configure. We need to create a VMkernel adapter and an initiator, do port binding using those VMkernel adapters, and then configure access to each iSCSI server IP.

When using thin disks on your Virtual Machines, it can be more difficult to recover your data on iSCSI after a power failure.

It is not possible to reduce the size of an iSCSI/VMFS volume.

NFS

NFS presents file devices over a network to your ESXi hosts, which mount the NFS Shared Folder and create an NFS Datastore from it.

The NFS server/array makes its local file systems available to ESXi hosts. ESXi hosts access the metadata and files on the NFS array/server using an RPC-based protocol.

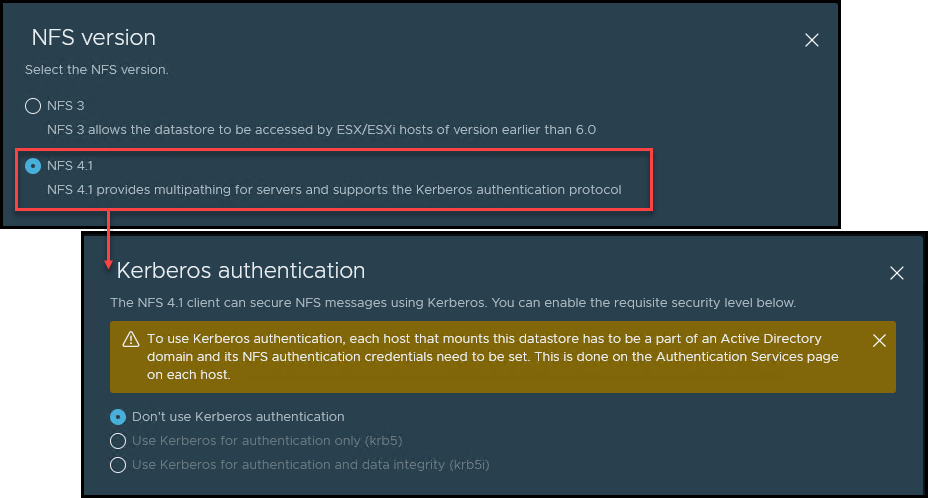

NFS can use Kerberos for host authentication, but hosts need to be part of an Active Directory domain. With this, NFS uses host-based authentication.

In VMware, only NFS v4.1 can use this authentication with cryptography mechanisms in addition to the Data Encryption Standard (DES).

NFS v3 is less secure, which is why we should implement extra security measures to secure the traffic between the NAS arrays and ESXi hosts, such as VLANs and a dedicated network.

NFS in ESXi hosts should use vSphere API for Array Integration (VAAI) primitives for better performance. With VAAI, ESXi on NFS can use Fast File Clone, Reserve Space, and Extended Stats (NAS).

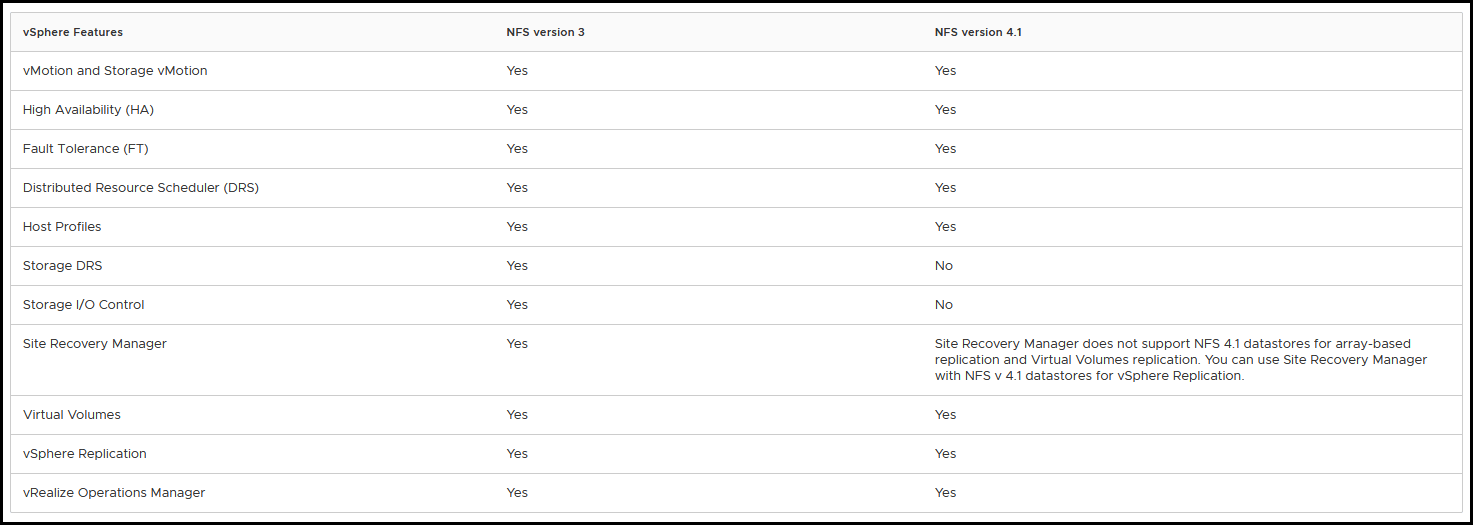

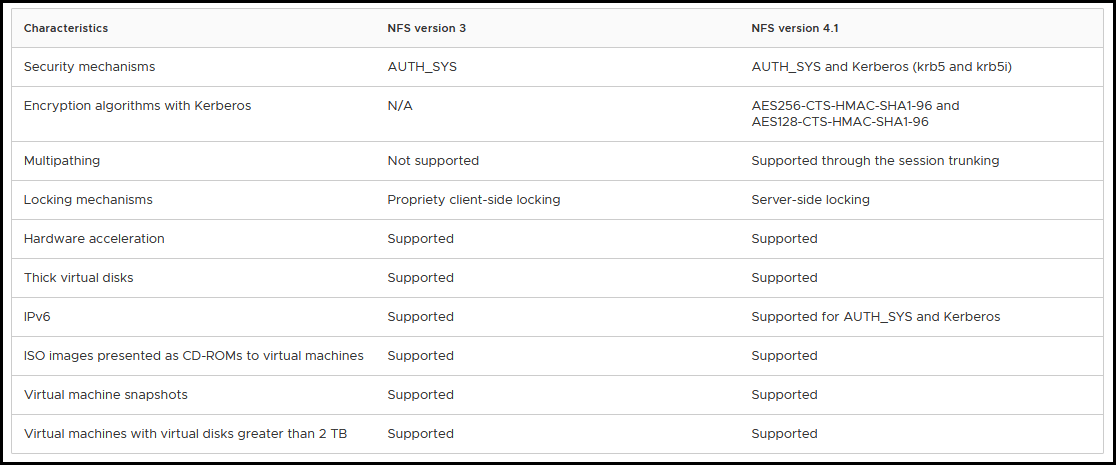

The following images show most of the supported features in ESXi hosts when using NFS v3 and NFS v4.1.

The next image also shows some limitations and supported features for both NFS versions.

NFS Benefits

One of the differences we notice while managing iSCSI or NFS datastores is when we need to extend a Datastore. The procedure differs depending on whether it is an NFS Shared Folder or an iSCSI LUN volume.

On the Storage side, we can extend both without any issues. But on the destination (the VMware environment), we need to do it differently.

With NFS, you only extend on the source, and your ESXi hosts automatically pick up the new size (after a Datastore refresh). With iSCSI, you need to extend the Volume in your Storage and then extend the Datastore in your vCenter/ESXi host.

When creating an NFS Datastore, there is no limit on the size. Since it is used as a Shared Folder, ESXi hosts don’t place a limit on the size of the Datastore.

The size limit of your NFS Datastore depends on the Storage vendor limits.

Using NFS v4.1, we can use authentication with cryptography to prevent unauthorized access to your NFS traffic.

NFS v3 doesn’t support multipathing, but if you use v4.1, you can implement a multipath to connect to your NAS array using NIC Teaming.

Easier to configure. NFS configuration in ESXi consists of creating a VMkernel adapter and mounting the NFS Share.

When there is a power failure on your ESXi hosts or Storage, recovery is easier with NFS if you are using thin disks.

We can reduce the size of an NFS Datastore in an ESXi host by reducing the size of the volume on the NAS array, since the ESXi host only uses the size it gets from the NAS array.

NFS Limitations

VMware doesn’t support Storage DRS, Storage I/O Control, and Site Recovery Manager on NFS v4.1.

Note: The above features are supported using NFS v3.0 (the other option when adding a Datastore on an ESXi host).

NFS v3 is less secure; you need NFS v4.1 for better security, encryption, and better load balancing through multipathing.

To get proper load balancing when using NFS v3, we need to create multiple paths to the NAS array, accessing some Datastores via one path and other Datastores via another.

It is not possible to create RDM disks for VMs on ESXi hosts, or to use volumes for Multi-server clustering.

I did a quick basic performance test to show some data on iSCSI and NFS performance.
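The manual load balancing described above for NFS v3 amounts to a static assignment of Datastores to NAS addresses. A minimal sketch of such a plan follows; the Datastore names and IP addresses (taken from the documentation range 192.0.2.0/24) are hypothetical, and the function only produces a plan, it does not mount anything.

```python
def assign_datastores(datastores, nas_addresses):
    """Spread datastores across NAS addresses in a fixed, alternating order."""
    if not nas_addresses:
        raise ValueError("at least one NAS address is required")
    plan = {address: [] for address in nas_addresses}
    for index, datastore in enumerate(datastores):
        address = nas_addresses[index % len(nas_addresses)]
        plan[address].append(datastore)
    return plan


# Hypothetical datastores and NAS interfaces, each interface on its own path.
datastores = ["nfs-ds-01", "nfs-ds-02", "nfs-ds-03", "nfs-ds-04"]
nas_addresses = ["192.0.2.10", "192.0.2.11"]

plan = assign_datastores(datastores, nas_addresses)
for address, mounted in plan.items():
    print(f"{address}: {', '.join(mounted)}")
```

Because the assignment is static, the balance is only as good as the I/O distribution between the Datastores: a single busy Datastore still uses a single path.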

Basic quick performance tests on iSCSI and NFS datastores

The test uses a Windows Server 2019 VM and some read and write statistics.

First, I ran the VM on an iSCSI Datastore, then moved the same VM to an NFS Datastore and ran the same tests.

Both Datastores are on the same Storage device and use the same Network Interfaces with MTU 9000.

iSCSI Datastore

Read: blocksize: 16384KB Average: 921.6 Mb

Write: blocksize: 16384KB Average: 844.8 Mb

Write+Read blocksize: 16384KB Average: 473.6 Mb

NFS Datastore

Read: blocksize: 16384KB Average: 908.8 Mb

Write: blocksize: 16384KB Average: 883.2 Mb

Write+Read blocksize: 16384KB Average: 435.2 Mb

This test was done with three different Windows disk performance tools, and all gave similar results.

These results are similar to what I have seen in my experience when using both protocols in VMware environments. Even if the difference is small, iSCSI Datastores come out ahead overall: in this test iSCSI was faster on reads and on mixed write+read, while NFS was slightly faster on writes.

When I checked IOPS, I saw about 10% more IOPS on VMs when using iSCSI Datastores.

Note: This is not an enterprise performance test but a simple basic test on a VM running Windows Server 2019. It should be read as such and not taken as 100% proof of the speed difference between the two Storage protocols. Also, the performance of both protocols depends on the Storage vendor.

Running this type of test on a Cluster VM or a Database VM could give different results.
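To put the numbers above side by side, this short sketch computes the relative difference of iSCSI over NFS for each test, using only the averages listed in this article. The unit is left out because it cancels in the ratio; the dictionary layout and function name are just choices made for the example.

```python
# Averages reported above for each test (same unit for both protocols).
results = {
    "read": {"iscsi": 921.6, "nfs": 908.8},
    "write": {"iscsi": 844.8, "nfs": 883.2},
    "write+read": {"iscsi": 473.6, "nfs": 435.2},
}


def iscsi_advantage_pct(iscsi: float, nfs: float) -> float:
    """Relative difference of iSCSI over NFS in percent (negative: NFS faster)."""
    return (iscsi - nfs) / nfs * 100.0


advantages = {
    test: round(iscsi_advantage_pct(values["iscsi"], values["nfs"]), 1)
    for test, values in results.items()
}

for test, pct in advantages.items():
    faster = "iSCSI" if pct > 0 else "NFS"
    print(f"{test}: {pct:+.1f}% ({faster} faster)")
```

The differences stay in the single-digit percent range, which fits the "close, maybe slight edge to iSCSI" summary at the top of the article.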

Conclusion

As we have seen in this article, there are some differences between iSCSI and NFS: how they are implemented, how network security is handled, how data flows between client and server, and how they are configured.

As I said initially, using iSCSI or NFS depends on your needs, environment, and most importantly, your Storage vendor. If you have an All-Flash Storage system, there is no point in using NFS (unless you have special needs in your environment). You can use iSCSI with all its features and benefits.

In my experience with both protocols, we get better overall performance when we use iSCSI. But what is best for one environment is not necessarily the best for another. Considering that, we need to check all the requirements, hardware, and configurations before deciding what the best option is.
